so if I understand correctly, we are talking about two different aspects: CMRR and "inherent" circuit noise:

CMRR is improved using precision resistors or ICs like THAT 1240.

Correct.

CMRR (Common Mode Rejection Ratio) is only relevant where the system experiences a lot of common mode noise.

In most cases this is not a problem in studios or even live sound with normal-length shielded, twisted-pair cables. So the resistor mismatch is not very critical in this case.

The extra precision of the "laser trimmed" resistors inside ICs is thus of limited value.

Modern 1% tolerance SMD thin-film resistors taken from the same reel usually match each other much more closely than 1% on average.

You can also use multiple resistors in parallel to further improve matching. Say, instead of 12k/6k (as found in most THAT parts), you use two sets each of 4 x 47k in parallel and 4 x 24k in parallel. This way you get much better than 1% accuracy in practice. Done in SMD, the cost increase is very nominal.
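The averaging effect of paralleling can be checked with a quick Monte Carlo run. This is only a sketch under simplifying assumptions that are mine, not from any datasheet: each resistor's error is drawn uniformly and independently within ±1%, and any offset shared by the whole reel is ignored (paralleling does not average that part out). Note that 4 x 47k gives 11.75k nominal rather than exactly 12k. The names `parallel` and `ratio_spread` are made up for this example.

```python
import random
import statistics


def parallel(values):
    """Resistance of several resistors connected in parallel."""
    return 1.0 / sum(1.0 / r for r in values)


def ratio_spread(r_a, r_b, n, tol=0.01, trials=20000, seed=1):
    """Standard deviation of the relative error of the leg ratio A/B.

    Each leg is built from n resistors of nominal value r_a (or r_b).
    Errors are uniform and independent within +/- tol (an assumption).
    """
    rng = random.Random(seed)
    nominal_ratio = r_a / r_b  # both legs are divided by n, so n cancels
    errors = []
    for _ in range(trials):
        leg_a = parallel([r_a * (1 + rng.uniform(-tol, tol)) for _ in range(n)])
        leg_b = parallel([r_b * (1 + rng.uniform(-tol, tol)) for _ in range(n)])
        errors.append((leg_a / leg_b) / nominal_ratio - 1.0)
    return statistics.pstdev(errors)


# Nominal values of the paralleled legs
nominal_a = parallel([47e3] * 4)
nominal_b = parallel([24e3] * 4)

# Single 1% resistors versus four 1% resistors in parallel per leg
spread_single = ratio_spread(12e3, 6e3, n=1)
spread_quad = ratio_spread(47e3, 24e3, n=4)

print(f"nominal legs: {nominal_a:.0f} / {nominal_b:.0f} ohm")
print(f"ratio error (std dev), single: {spread_single * 100:.3f} %")
print(f"ratio error (std dev), 4 in parallel: {spread_quad * 100:.3f} %")
```

With independent errors the spread of the ratio should shrink by roughly the square root of the number of paralleled parts, so about a factor of two for four resistors.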

If you also want high CMRR when there could be an impedance mismatch between driver and receiver, then THAT's "InGenius" solution is even better.

Let me rephrase this. In the real world, where the output resistors in your source and the cable resistances are not laser trimmed to 0.01% tolerance, you are bound to find that the very high CMRR of an expensive IC is degraded to something just as bad as the discrete circuit with 1% tolerance resistors.
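To put rough numbers on this, here is a minimal sketch of the CMRR of a plain four-resistor differential amplifier with an ideal op-amp, where the source output resistors simply add in series with the two input resistors. The 100 Ω / 105 Ω source resistors and the single 0.01% error are hypothetical values picked for illustration; how far the CMRR drops depends entirely on the impedances you assume.

```python
import math


def diff_amp_cmrr_db(r1, r2, r3, r4):
    """CMRR in dB of a four-resistor differential amp (ideal op-amp).

    r1: cold input to inverting node      r2: feedback resistor
    r3: hot input to non-inverting node   r4: non-inverting node to ground
    Any source output resistance is added to r1 / r3 by the caller.
    """
    # Common-mode gain, written so that perfect matching gives exactly 0
    a_cm = (r4 * r1 - r2 * r3) / (r1 * (r3 + r4))
    # Differential gain: average of the gains seen by the two inputs
    a_dm = 0.5 * (r4 * (r1 + r2) / (r1 * (r3 + r4)) + r2 / r1)
    if a_cm == 0:
        return math.inf
    return 20 * math.log10(abs(a_dm / a_cm))


# Receiver with 12k/6k resistors, one input resistor off by 0.01%
R_IN, R_FB = 12e3, 6e3
r3_trimmed = R_IN * 1.0001

# Hypothetical source output resistors (assumed values, not from the post)
RS_COLD, RS_HOT = 100.0, 105.0

cmrr_ideal_source = diff_amp_cmrr_db(R_IN, R_FB, r3_trimmed, R_FB)
cmrr_real_source = diff_amp_cmrr_db(R_IN + RS_COLD, R_FB, r3_trimmed + RS_HOT, R_FB)

print(f"trimmed receiver, perfect source: {cmrr_ideal_source:.1f} dB")
print(f"same receiver, mismatched source: {cmrr_real_source:.1f} dB")
```

The same function can be fed 1% resistor values to compare against a discrete build.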

There are options to improve this situation, at the cost of a considerable increase in circuit complexity, if very high real-world CMRR is really a necessary design goal.

Aside from CMRR, the circuits proposed by Mackie or by Self that I linked in post #1 are inherently noisier than the "double inverted" configuration, when adjustable gain is needed.

The Mackie Circuit is relatively noisy because of the relatively high resistor values and because the second stage (in 0dB setting) first attenuates the signal by around 10dB (while noise remains static) and then amplifies the signal AND noise by the same 10dB.
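Both effects can be put into numbers with the standard thermal noise formula, e_n = sqrt(4·k·T·R·B). The sketch below is not taken from the Mackie schematic: the resistor values, the 20 kHz bandwidth, the 300 K temperature and the -110 dBu stage noise are all assumptions chosen only to show the trend.

```python
import math

BOLTZMANN = 1.380649e-23     # J/K
DBU_REF_VRMS = math.sqrt(0.6)  # 0 dBu = 0.7746 V RMS (1 mW into 600 ohm)


def thermal_noise_vrms(resistance_ohm, bandwidth_hz=20e3, temperature_k=300.0):
    """RMS thermal (Johnson) noise voltage of a resistor."""
    return math.sqrt(4 * BOLTZMANN * temperature_k * resistance_ohm * bandwidth_hz)


def to_dbu(vrms):
    """Convert an RMS voltage to dBu."""
    return 20 * math.log10(vrms / DBU_REF_VRMS)


# 1) Higher resistor values mean more thermal noise (10x R gives +10 dB)
for r in (1e3, 10e3, 100e3):
    print(f"{r / 1e3:6.0f} k: {to_dbu(thermal_noise_vrms(r)):7.1f} dBu")

# 2) Attenuate by 10 dB, then make up 10 dB: the signal ends where it
#    started, but noise added after the attenuator is amplified by 10 dB.
MAKEUP_GAIN_DB = 10.0
stage_noise_dbu = -110.0  # hypothetical noise at the make-up stage input
output_noise_dbu = stage_noise_dbu + MAKEUP_GAIN_DB
print(f"noise after make-up gain: {output_noise_dbu:.1f} dBu")
```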

The circuit by Mr Self mainly has poor gain control characteristics, if designed with sensible impedances. There is a lot of "interactivity" between gain and impedances. Noise is much better than Mackie, but probably still worse than double inverted, if we keep the load impedances on the amplifier output moderate and keep the input at ~10kOhm balanced.

The "double inverted" configuration decouples gain and the balanced input completely.

The actual output amplifier is basically an inverted amplifier where we can set both gain and attenuation with one resistor in the feedback loop, without ANY effect on CMRR. So we are free to design our gain control absolutely freely.

Further, because the output amp is in effect a mixer amp and the second phase is inverted and added to the first, we have lower value feedback resistors (less noise) and when we attenuate we also reduce circuit noise by the same amount. And because we use all op-amps inverting, any common mode induced distortion doesn't happen in the first place.
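As a rough illustration of that output stage, treat it as a textbook two-input inverting summing amplifier: the signal gain per input is Rf/Rin (inverted), and the op-amp's own voltage noise sees a noise gain of 1 + Rf/(Rin/2). The 10k input resistors and the feedback values below are hypothetical, not taken from an actual schematic.

```python
import math


def signal_gain_db(rf, rin):
    """Magnitude of the inverting gain per input, in dB."""
    return 20 * math.log10(rf / rin)


def noise_gain(rf, rin, n_inputs=2):
    """Noise gain of an inverting summing amp with n equal input resistors."""
    return 1 + rf / (rin / n_inputs)


R_IN = 10e3  # hypothetical input resistor per phase

# Changing only the feedback resistor moves the gain from cut to boost.
# Noise arriving from the preceding stages is scaled by the same Rf/Rin,
# so it drops together with the signal when attenuating.
for rf in (1e3, 3.16e3, 10e3, 31.6e3):
    print(
        f"Rf = {rf / 1e3:5.2f} k: "
        f"gain {signal_gain_db(rf, R_IN):6.1f} dB, "
        f"noise gain {noise_gain(rf, R_IN):.2f}"
    )
```

The input resistors, which are what the balanced input sees, never change in this loop; only Rf does.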

BTW, I only recommend this circuit if the goal is a single-ended output, wide-range gain-adjustable circuit with balanced input.

I also noted your other thread:

help me understand differences between THAT line receivers for ADC

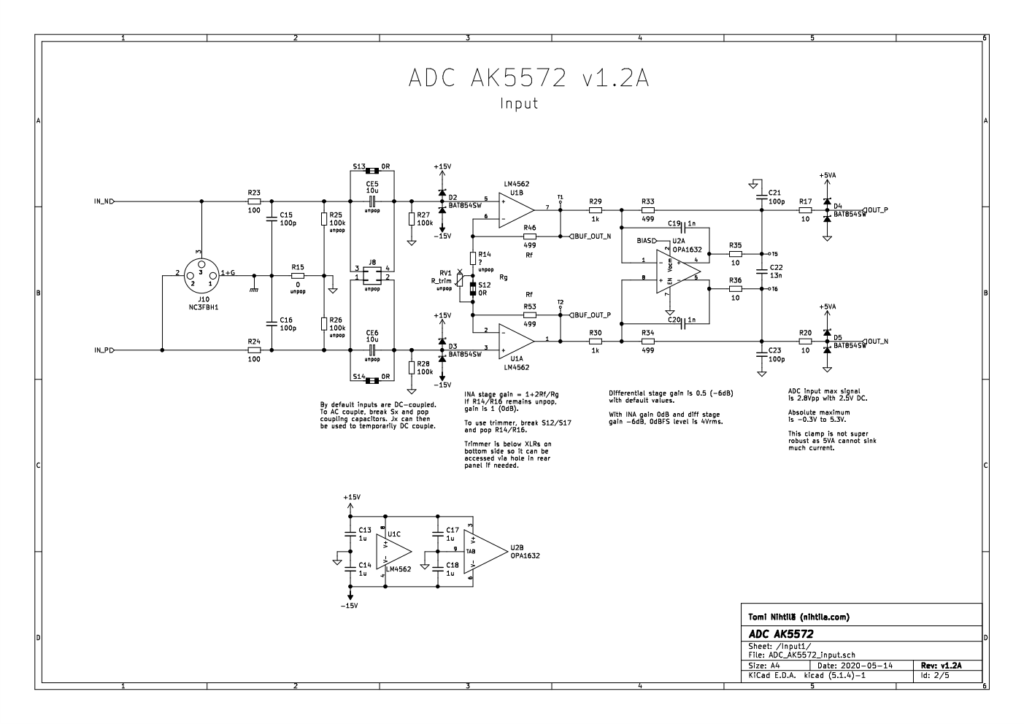

I would suggest that the "gold standard" for a balanced-in, balanced-out circuit to drive an ADC uses a "Fully Differential Amplifier" in "active attenuator" configuration, something like this (second part):

Pretty much any modern ADC works best from the lowest possible source impedance and requires capacitors directly at its input to keep switching glitches from the ADC circuit from impacting the analog stage. This circuit delivers this.

As an alternative, the FDA can be replaced with a conventional op-amp (dual or two singles) in the Birt circuit.

Thor

PS, as an outside bet, you can use high grade transformers for Line Input and to feed an ADC from a line. Done right and with sufficiently high quality transformers, the results can exceed those of active electronics, at a massive cost increase.