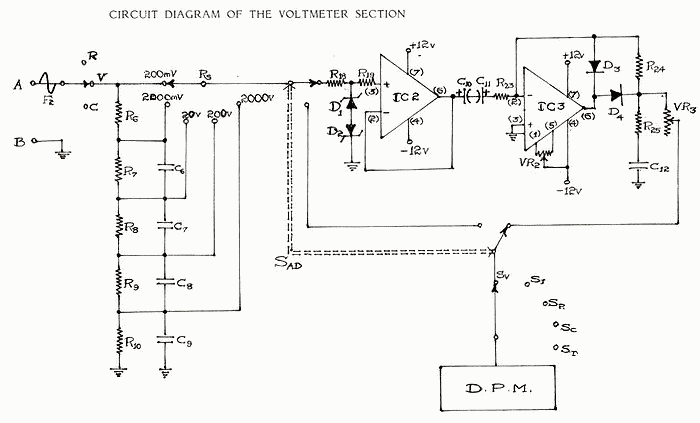

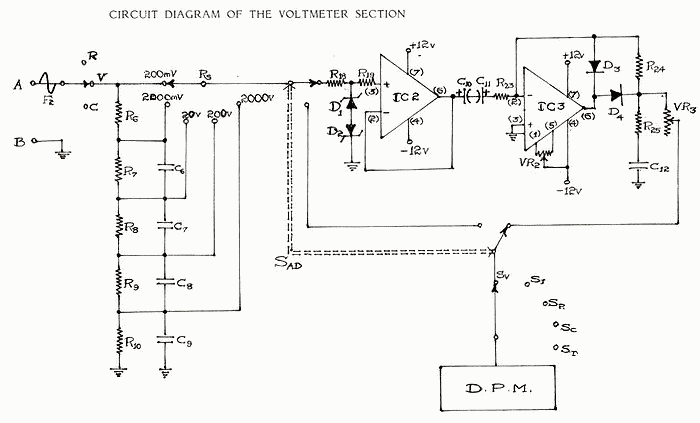

No, I get 25.3V on one side and 19V on the other. Shouldn't the capsule voltage be higher? Only asking as I'm not sure, to be honest...

Because that's got at least a 1Meg (if not larger) series resistor for filtering that voltage, and your voltmeter has a finite input resistance / impedance (1-10Meg), thus forming a voltage divider.

And that's ignoring the very limited current output capability of said oscillator board...

Do you get the same 19V on both sides of R20?


512 Audio Skylight

- Thread starter Amled87



Shouldn't the capsule voltage be higher?

Usually it should be, but as I mentioned in my previous reply, there's a chance the measurement itself is producing false readings.

My meter is a Milwaukee TRMS with a 40m resistance. I'm assuming that's still too low to read accurately?

My meter is a Milwaukee TRMS with a 40m resistance.

Close, but no. Assuming that's the 2217-40 meter, spec sheet says "Input impedance Voltage DC 10 MΩ". Considering the oscillator is supposed to supply that voltage into (in theory) infinite-ohms, it might still be too low.

Where did that "40m" figure come from, by the way? Small "m" is the SI prefix for "milli", i.e. 1000x SMALLER than the reference unit. Capital "M" is the prefix for "mega", i.e. one million times GREATER than the reference unit.

If you had something like a 100Meg resistor at hand (ideally no looser than ±10% tolerance), you could probe the voltage with that in series (the "top" resistor in the resulting divider; your meter's input impedance would then become the "bottom" resistor), and then multiply the reading by 11x to get (close to) the real voltage there.
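A minimal sketch of that correction in Python, assuming the meter's input behaves as a pure 10 MΩ resistor to ground and that the voltage source doesn't sag under the (now much smaller) load. The function name `true_voltage` is purely illustrative.

```python
def true_voltage(reading_v: float, r_series_ohm: float, r_meter_ohm: float = 10e6) -> float:
    """Undo the divider formed by an external series resistor (top leg) and the
    meter's input impedance (bottom leg):
        V_true = V_read * (R_series + R_meter) / R_meter
    """
    return reading_v * (r_series_ohm + r_meter_ohm) / r_meter_ohm


# With a 100 MΩ probe resistor the correction factor is (100M + 10M) / 10M = 11.
print(true_voltage(1.0, 100e6))  # 11.0
```

So a reading of, say, 5 V through the 100 MΩ resistor would correspond to roughly 55 V at the probed node, give or take the resistor's tolerance.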

I always forget about milliohms, as I honestly have never used anything that small. It's the 2217-20, but I was referring to the maximum reading range, which is 40M.


I don't have a 100M resistor, but I will have a bunch of 1G ones, which arrive today (I bought several BOMs to build a few ORS87s; I just have to pick the boards up next).

It's the 2217-20, but I was referring to the maximum reading range which is 40M.

And what does the maximum resistance it can measure have to do with its input impedance when measuring voltages?

That's close enough to what's in the input stage of most multimeters. That string of resistors adds up to the meter's input impedance - most of the time, ~10Meg or thereabouts.

Especially when measuring "downstream" of a large-value series resistor, that and the meter's input impedance end up as a(n extra) resistive voltage divider, giving you a lower-than-expected reading.
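To put numbers on that divider, here is a minimal sketch. It assumes the node upstream of the series resistor holds its voltage perfectly (i.e. it ignores the oscillator's current limit) and that the meter is a plain 10 MΩ load. The 60 V node and the 1 MΩ / 10 MΩ series values are hypothetical examples, not measured values from this mic.

```python
def loaded_reading(v_node: float, r_series_ohm: float, r_meter_ohm: float = 10e6) -> float:
    """Voltage the meter displays when probing downstream of a series resistor
    whose other end sits at v_node:
        V_read = V_node * R_meter / (R_series + R_meter)
    """
    return v_node * r_meter_ohm / (r_series_ohm + r_meter_ohm)


# Hypothetical 60 V node behind a 1 MΩ filter resistor: reads about 10/11 of the truth.
print(round(loaded_reading(60.0, 1e6), 2))  # 54.55
# Same node behind a 10 MΩ resistor: the meter shows only half.
print(loaded_reading(60.0, 10e6))           # 30.0
```

The larger the series resistor relative to the meter's input impedance, the bigger the error, which is why a filtered capsule-bias node is a particularly bad place to probe with a 10 MΩ meter.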

That can also happen if the voltage source is unable to provide more than a handful of uA (microamps). To get a reading of 60V across your meter's 10Meg input impedance would require a source capable of providing at least 6uA; anything less, and you'll be dragging down the voltage you're trying to measure, again getting a lower-than-expected reading.
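The 6uA figure is just Ohm's law. The sketch below also shows the flip side under a deliberately crude assumption: if the source could only deliver a fixed maximum current (the 2uA figure here is hypothetical), the most it could hold across the meter is that current times 10 MΩ. Real oscillator boards sag more gradually than a hard current limit, so treat this as illustrative only.

```python
def required_current(v_target: float, r_meter_ohm: float = 10e6) -> float:
    """Ohm's law: current the source must supply to hold v_target across the meter."""
    return v_target / r_meter_ohm


def max_voltage_at_limit(i_max_a: float, r_meter_ohm: float = 10e6) -> float:
    """Crude model: a source hard-limited to i_max_a can hold at most I * R across the meter."""
    return i_max_a * r_meter_ohm


print(f"{required_current(60.0) * 1e6:.1f} uA needed for 60 V")    # 6.0 uA needed for 60 V
print(f"{max_voltage_at_limit(2e-6):.1f} V max with a 2 uA source")  # 20.0 V max with a 2 uA source
```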

https://documents.milwaukeetool.com/58-14-2219D7.pdf

Page 3, lower right:

"Input impedance: Voltage DC: 10MΩ"

It helps to know one's tools, how they operate, and their real-world limitations and/or idiosyncrasies.

I don't have a 100M resistor, but I will have a bunch of 1G

1G works as well, just correct the math (1010Meg divided by 10Meg, multiplied by the voltage reading you get).
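A sketch of the 1G version, under the same assumptions as before (pure 10 MΩ meter, stiff source). Besides the x101 correction factor, it shows why the series resistor helps in the first place: the probe draws roughly 101 times less current from the node than the bare meter. The 60 V node voltage and the `probe_stats` helper are hypothetical, for illustration only.

```python
R_METER = 10e6  # meter DC input impedance per the spec sheet, ohms
R_PROBE = 1e9   # 1 GΩ resistor in series with the probe tip (the divider's "top" leg)


def probe_stats(v_node: float, r_probe: float = R_PROBE, r_meter: float = R_METER) -> dict:
    """Correction factor, expected display and load current for a series-resistor probe."""
    r_total = r_probe + r_meter
    return {
        "factor": r_total / r_meter,              # multiply the reading by this
        "reading_v": v_node * r_meter / r_total,  # what the meter will show
        "load_a": v_node / r_total,               # current drawn from the node
    }


bare_load = 60.0 / R_METER  # current the meter alone would draw at 60 V
stats = probe_stats(60.0)
print(stats["factor"])                                                # 101.0
print(f"{stats['reading_v']:.3f} V shown")                            # 0.594 V shown
print(f"{stats['load_a'] * 1e9:.1f} nA vs {bare_load * 1e6:.1f} uA")  # 59.4 nA vs 6.0 uA
```

Note that the displayed value ends up well under a volt, so the meter's resolution on that range limits how precisely the real voltage can be backed out. At gigaohm levels, surface leakage across the board or the resistor body can also shunt some current, so treat the result as approximate.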


Thank you for the lesson, Khron. I mentioned 40M because I had quickly browsed over the specs and didn't notice that it said "maximum resistance" reading. I knew to look for its input impedance, but it was early morning and I made a quick error.


Thank you for all the help, the knowledge you've shared, and for being patient with me! When I have the 1Gs, I'll revisit. The mic doesn't sound bad; if anything, it's a lot "less bright" than the Nova/Sterling stuff sharing the same or similar construction. I'd assume that's due more to the K47-style capsule than anything else, though, as it doesn't have the HF boost associated with K67-style capsules.

The mic doesn't sound bad; if anything, it's a lot "less bright" than the Nova/Sterling stuff sharing the same or similar construction. I'd assume that's due more to the K47-style capsule than anything else, though, as it doesn't have the HF boost associated with K67-style capsules.

Most likely
