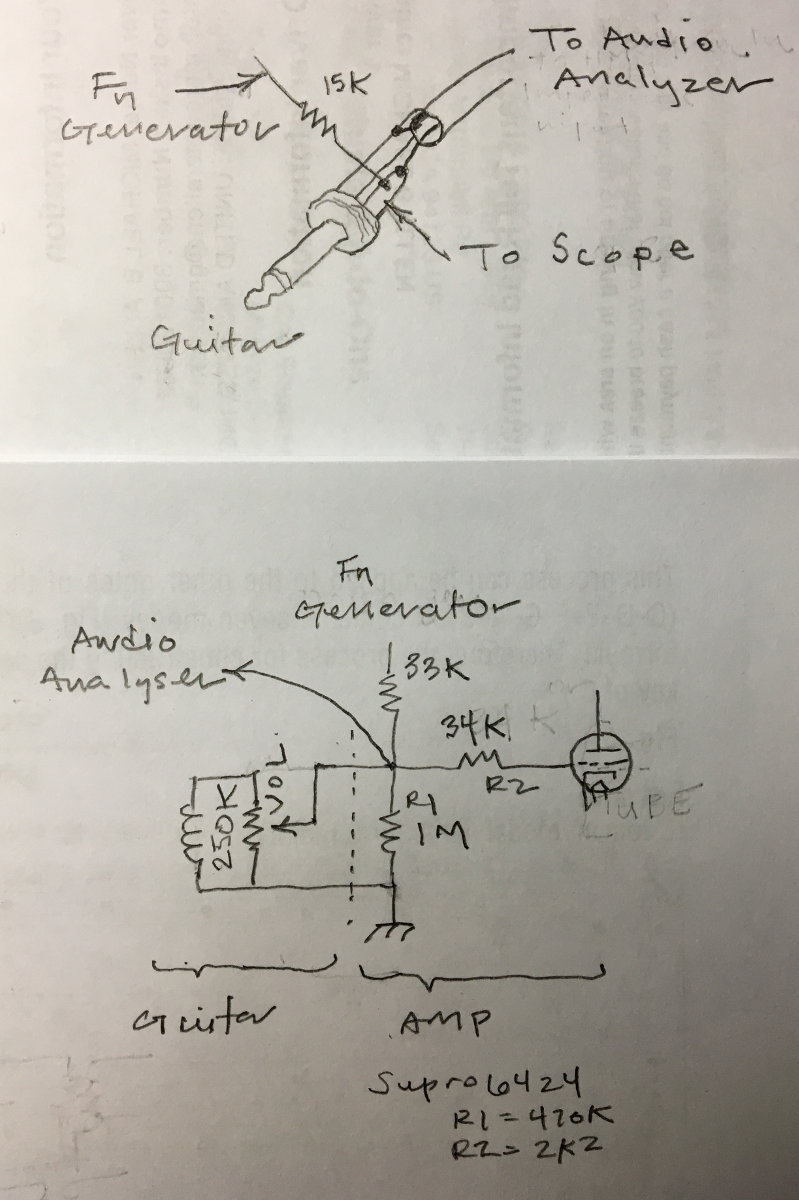

No, we haven't recommended that. The high-Z side should receive the line level and the low-Z side should go to the amp; this arrangement takes care of the attenuation needed to avoid overdriving the amp's input stage. In doing so, the amp is presented with a very low source impedance, which is not right if the goal is to recreate the guitar-amp interaction. To do that, an additional LRC circuit should be added. However, the main point of using an amp is the loudspeaker/air/room interaction, which is not lost; many SEs are satisfied with that and do not go the extra mile of simulating the impedance of the guitar.

That's why we don't recommend it.

It can be done easily by inserting a resistor of a few tens of kilohms in series with the output. Alternatively, you can install a 500k potentiometer there, which will also allow level trimming.
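As a rough illustration of what the series resistor does, here is a minimal sketch that treats the resistor and the amp input as a simple voltage divider. The 1 MΩ amp input impedance, the zero-ohm transformer output, and the function name are assumptions for illustration only; real values depend on the amp and the transformer.

```python
import math

def series_resistor_effect(r_series_ohms, r_source_ohms=0.0, r_amp_in_ohms=1_000_000.0):
    """Return (source impedance seen by the amp in ohms, level change in dB).

    Assumptions: purely resistive source and load, amp input of 1 Mohm,
    transformer output impedance given by r_source_ohms (default 0).
    """
    r_out = r_source_ohms + r_series_ohms
    # Voltage divider formed by the total source resistance and the amp input
    gain = r_amp_in_ohms / (r_amp_in_ohms + r_out)
    return r_out, 20.0 * math.log10(gain)

# A few example resistor values in the "few tens of kilohms" range
for r in (22_000, 47_000, 100_000):
    z, db = series_resistor_effect(r)
    print(f"{r / 1000:.0f}k series: source impedance {z / 1000:.0f}k, level {db:.2f} dB")
```

Under these assumptions the level loss stays small, so the resistor mainly raises the source impedance the amp sees rather than acting as an attenuator.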

Actually, there are many possibilities in this respect, like adding the aforementioned LRC circuit.
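To get a feel for the LRC option, here is a small sketch based on a common simplified pickup model: an inductance in series with a resistance, loaded by a shunt capacitance. The topology and the component values (2.5 H, 6 kΩ, 470 pF) are assumptions for illustration, not values from this thread.

```python
import math

def resonance_hz(l_henry, c_farad):
    """Resonant frequency of an ideal LC pair: 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2.0 * math.pi * math.sqrt(l_henry * c_farad))

def series_impedance_magnitude(r_ohms, l_henry, f_hz):
    """Magnitude of the series R + L branch at frequency f_hz."""
    x_l = 2.0 * math.pi * f_hz * l_henry  # inductive reactance
    return math.hypot(r_ohms, x_l)

# Assumed example values, roughly in the range of a passive guitar pickup
L_PICKUP = 2.5      # henries (assumption)
R_PICKUP = 6_000.0  # ohms (assumption)
C_SHUNT = 470e-12   # farads, pickup plus cable capacitance (assumption)

print(f"resonance: {resonance_hz(L_PICKUP, C_SHUNT):.0f} Hz")
for f in (100, 1_000, 5_000):
    z = series_impedance_magnitude(R_PICKUP, L_PICKUP, f)
    print(f"|Z| at {f} Hz: {z / 1000:.1f} kohm")
```

The point of the sketch is that such a source has an impedance that rises with frequency and a resonance in the audio band, which a plain series resistor cannot reproduce.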